Artificial (lack of?) Intelligence, diverse ways of knowing and the possibility of co-creating better

Overcoming the wisdom gap in a world that's well and truly 'lost'

I’ve referred to wisdom as the “process of knowing, deeply caring for and living in close relation to what truly matters.”

For various reasons, I’ve also suggested we are suffering from a colossal wisdom deficit or gap.

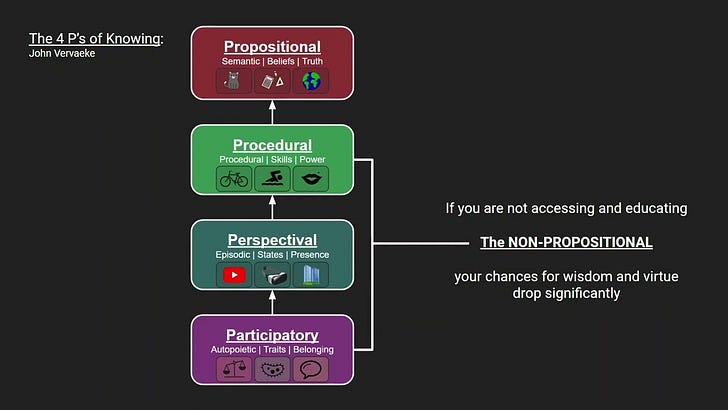

We’ve become obsessed with the propositional, which limits the depth, diversity and normative application of our knowledge. We’ve gotten wrapped up in stories about our atomisation or separateness, within the context of a meaningless and deterministic universe where we have no freedom of will or organismic agency. We’re battling with much of life, always trying to get the current task done in service of some future orientation, perhaps something like greater (financial) ‘wealth’ upon ‘retirement’. We’re barely able to ‘be’ with what is, most of our doing would be better off not being done, and there’s close to no time or energetic capacity for an integrous and ongoing process of becoming (developing self in relation to other and world, so that one might become a highly adaptive agent of and for the whole).

In short, we’re lost. We’re disconnected from what truly matters. We’re failing to match our intelligence and general problem-solving abilities with the capacity to thoughtfully, sustainably and equitably coordinate the totality of human activities such that we give ourselves a real chance of thriving for millennia to come.

Sounds pretty ‘negative’, right?

That’s because it is.

But it’s not the only way of relating to our current predicament. It’s a frame, albeit one that I’m pretty darn confident has some directional truthfulness.

We are also, even amidst a rapidly destabilising world (arguably necessarily so), seeing the ongoing beauty, brilliance and possibility of care, ingenuity and collective action.

It was the best of times. It was the worst of times.

You get the drill.

That’s my preamble done. Short, sharp and bittersweet.

But why am I writing this?

Well, you may have heard that AI is the bee’s knees. Or maybe it’s utter BS. But, perhaps it’s neither, both, less and so much more, all at the same time (🤯).

The thing is, at least in my experience, a lot of folks working in and around AI now, especially those in your everyday organisation (private or public), haven’t spent much time studying the historicity of AI. As a result, it’s very hard to situate current abilities and development trajectories in their environmental (big E here) context. It’s hard to have a decent sense of where, when and how to develop / use this ‘thing’, along with where, when and how not to develop / use this ‘thing’. It’s hard to push back against the AI arms race. It’s hard to say no. It’s hard to do otherwise. You end up stuck, doing more AI in this and that context, likely (at least for now) adding to your workload, frustration and growing moral dissonance.

Suboptimal, people.

What I’d like to do is encourage a wee pause (Ha! Remember those pause letter shenanigans? Utter BS. Moving on!). The pause I refer to is more micro. It’s first at the level of you, the reader of this. Hopefully it can extend from there… We’ll see.

As part of this pause, I’d strongly encourage you to consider the history of AI, long before the formal work at Dartmouth kicked off in ’56. This paper will help, as will the images below.

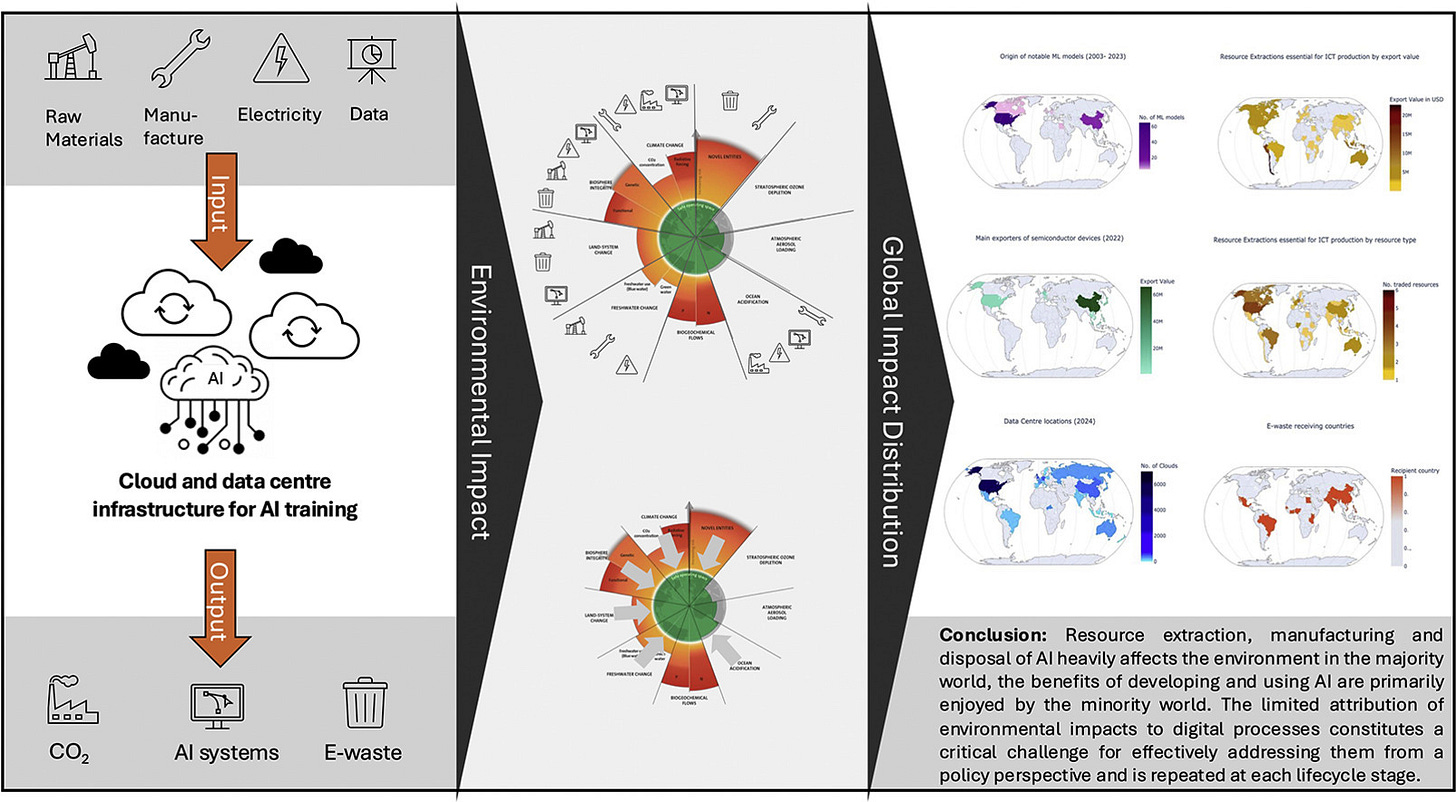

I’d also encourage you to consider the very material realities of AI, using the widest boundary thinking you can.

It may also be useful to explore some of the apparent differences between organisms and machines.

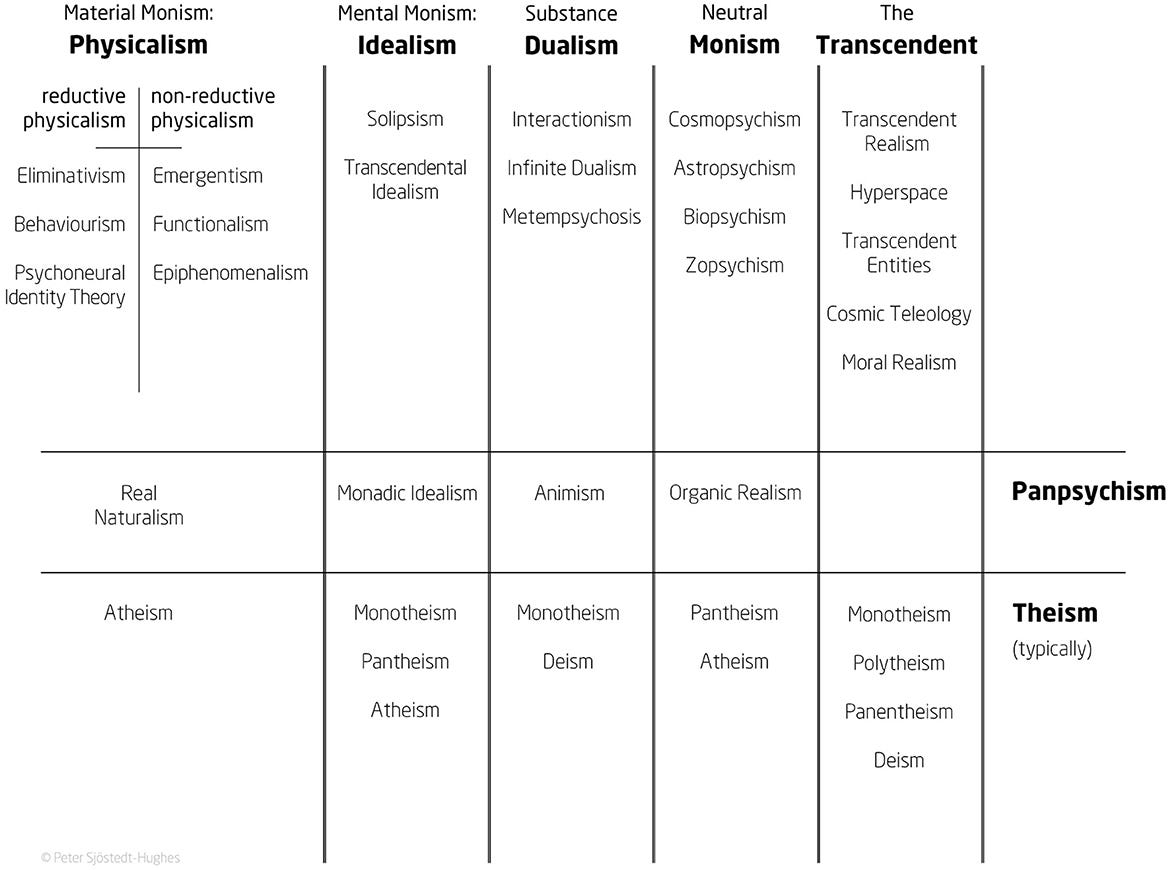

It may also be useful to introduce yourself to the landscape of theories in the Philosophy of Mind (image below is non-exhaustive), particularly if you’re getting tempted to make metaphysical claims.

And last, but certainly not least, it is absolutely worth moving beyond the propositional (only), and becoming familiar with ‘lost ways of knowing’. This, as is argued in the video, seems like a critical path dependency for cultivating the collective wisdom that may help us go in, through and beyond the predicaments we face.

I speculate that if just a few more folks do this, especially those with some clout within the context of organisational AI development and use (again, public or private), we will start maturing and nuancing our discussions about not just what we can do, but what we should do.

And this, my friends, is what matters (doing what is most good and right because it’s most good and right).

There are many possible worlds we might create. The complex nature of causality in such a context is mind-boggling, but let me simply say that our collective choices and actions will have a darn big impact on what becomes our future-present. We have real influence. Don’t forget that.

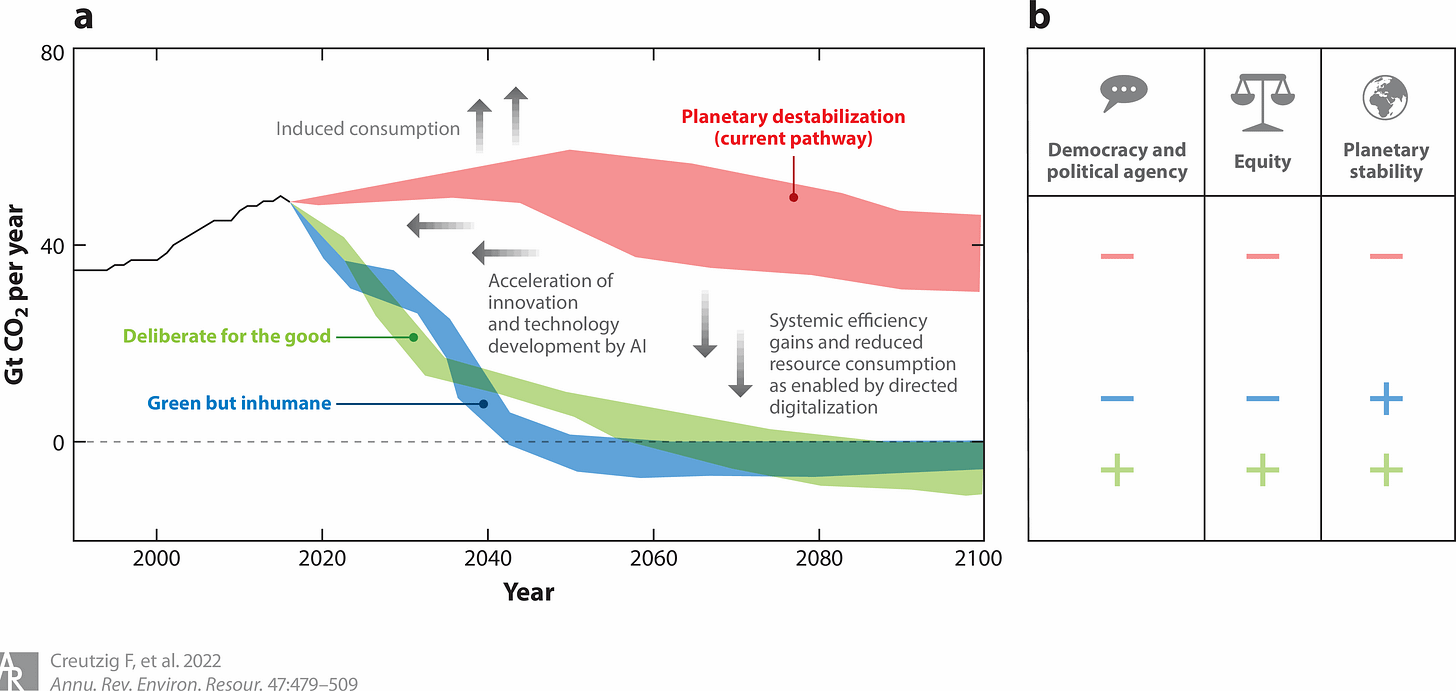

This process of sitting with, curiously exploring, engaging with many perspectives and asking the question, “Just because we can, should we?” has the potential to bring about wiser sociotechnological development that actually supports human and planetary needs (‘deliberate for the good’ in the model below). It can be part of our shared process of knowing, deeply caring for and living in close relation to what truly matters.

Every ounce of energy we expend on such a collectively ambitious meta-exercise will pay off in some way.

So, what are we fecking waiting for, eh?

With love as always.