This short presentation is from yesterday’s Diversity and Inclusion in AI Symposium, organised by Dr. Rifat Ara Shams, Dr. Muneera Bano and Prof. Didar Zowghi.

The basic premise is that:

There is a significant gap between our ethical intentions and the way we behave (the ethical intent to action gap)

There are many reasons for this, ranging from the deep systemic to the everyday acute (the metacrisis pretty much sums this up)

Unfortunately, very few organisations are actually ‘doing ethics’ (engaging in systematic normative reflection). This is sometimes called ethicophobia, and again, it results from a complex mix of factors. (What’s interesting, however, is that this creates a lot of moral dissonance for people within organisations. These people want to do good, they care about the future, etc.)

When situated within a broader sociotechnical approach to ethical decision making, participatory ethics offers a range of benefits to organisations and society

Such approaches are crucial to enacting the values we express about diversity, equity and inclusion in AI development and use (and beyond)

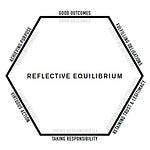

But this is no easy fix. It requires us to do much more of, and become much better at, a particular kind of work: pluralistically engaging in systematic normative reflection that verifiably affects our decisions, our actions and the spectrum of consequences that follow.

Doing this will require collective moral courage

Not doing this will result in us failing to uphold our moral responsibilities

Not upholding our moral responsibilities, as is all too common today, will increase the likelihood of catastrophic and existential risk (no, not because AI ‘wakes up’ and eats us, but because of our inability to thoughtfully apply restraint, cultivate virtue and become a wiser civilisation)

So… Let’s get out of our own way and step up!

If you want any of the references, comment below.

With love as always.
